Using SYCL* for GPU Offload of Monte Carlo-Based Financial Services Workloads

The Need for More Flexible Parallel Compute Power

The Basel Committee on Banking Supervision* (BCBS) forecasts a four- to twentyfold increase in compute demand due to the growing need for financial risk mitigation measures. These include advanced market prediction algorithms and risk simulations to better understand asset volatility associated with climate change, supply chain disruption, and geopolitical changes.

Software developers in the Banking and Financial Services Industry (FSI) rely on high-performance computing (HPC) environments with GPU-based acceleration to implement these risk simulation models. Because these workloads are deployed so widely, it is paramount that they run on a large variety of hardware platform configurations. Before the Unified Acceleration (UXL) Foundation's efforts and the ever-increasing popularity of SYCL*, developers faced substantial obstacles and inefficiencies, because code had to be ported and refactored every time a workload was deployed in a new environment.

The Monte Carlo method is widely used in finance and relies on high-performance random number generation to drive its simulated scenarios. It is mainly used for option pricing, because the expected payoff of an option (and therefore its expected return on investment, or ROI) is often too complex to compute directly, especially for exotic options.

American and European options are two widely used option types, and Monte Carlo simulation is used to predict the probability of their various investment outcomes. A European option can be exercised only at its expiration date, whereas an American option can be exercised at any time up to expiration.

Since their introduction in 2020, the SYCL-based oneAPI Math Kernel Library (oneMKL) Interfaces, a component of the oneAPI specification and an open-source extension of the Intel® oneAPI Math Kernel Library, have provided Data Parallel C++ interfaces for Random Number Generator (RNG) routines. These routines implement commonly used pseudorandom, quasi-random, and non-deterministic generators with continuous and discrete distributions.

Like some other oneMKL function domains, oneMKL RNG includes two kinds of functionality in its implementation:

- Manual offload functionality (Random Number Generators Host API)

- Device functionality as a set of functions callable directly from SYCL kernels (Random Number Generators Device Routines)

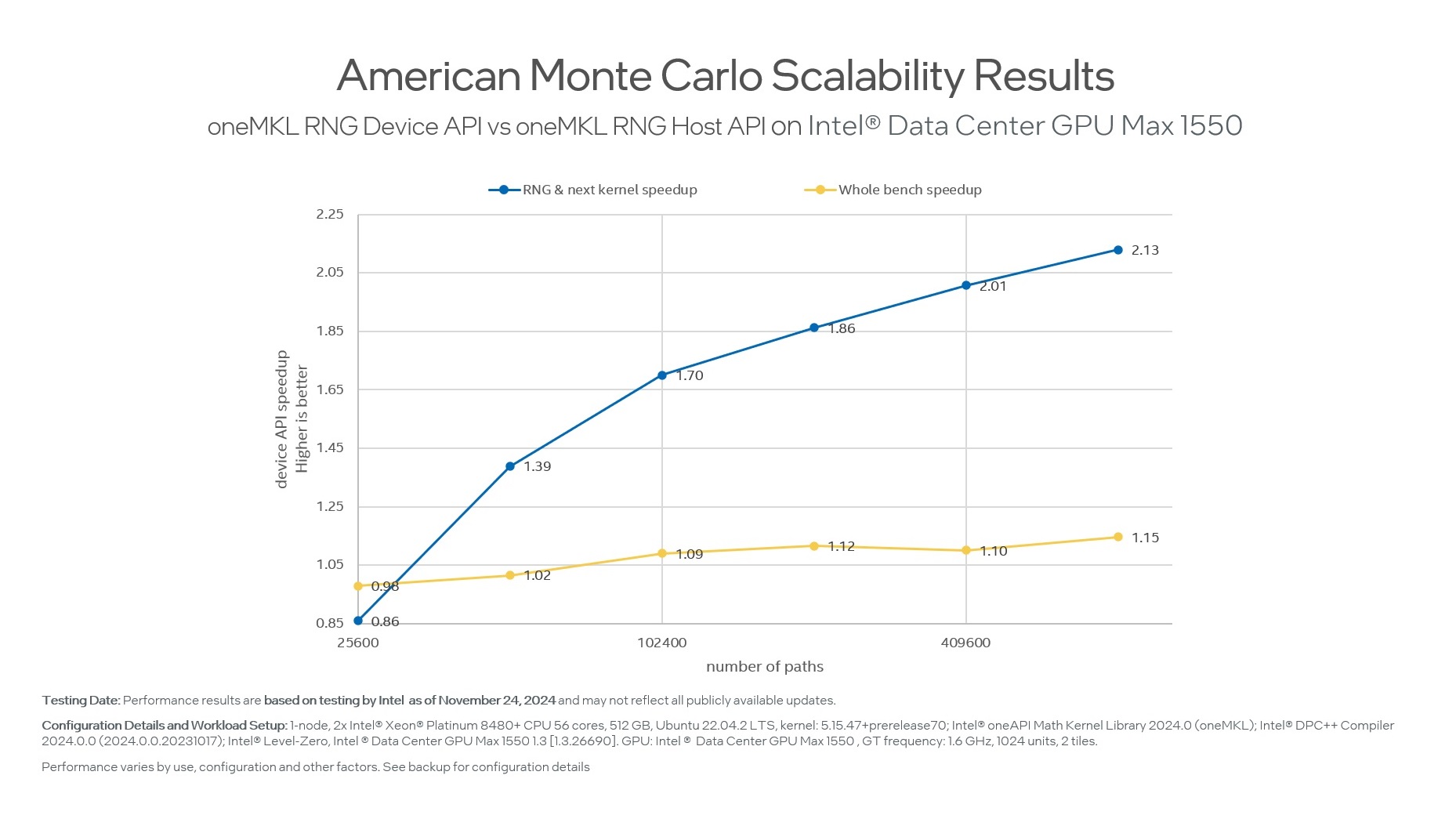

We will show how the oneMKL RNG Device API can increase computation performance on Intel® Data Center GPUs by a factor of 2 or more compared to a Host API implementation on the same hardware.

We will also use Monte Carlo-based American option pricing as an example to demonstrate migrating financial workloads from proprietary CUDA* code to the open, multi-platform SYCL programming model with SYCLomatic (or the Intel® DPC++ Compatibility Tool).

All results were obtained on an Intel® Data Center GPU Max 1550 with the oneAPI 2024.0 release.

RNG Device API Basics

The Device API routines are designed to be called directly from SYCL kernels. This can bring a significant performance boost, because kernel submission overhead may greatly affect the overall execution time of an application. The idea is to generate random numbers on the fly and consume them in the same kernel, without paying for writing them to global memory and reading them back. For example:

Host API:

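```cpp
#include <oneapi/mkl/rng.hpp>

// Host API: the library call fills the USM buffer d_samples in its own kernel,
// and a second kernel then reads the numbers back from global memory.
// The same queue (sycl_queue) is used for both steps.
auto engine = oneapi::mkl::rng::mrg32k3a(sycl_queue, 1ull);
auto gen_event = oneapi::mkl::rng::generate(
    oneapi::mkl::rng::gaussian<double>(0.0, 1.0), engine, n, d_samples);

sycl_queue.parallel_for(
    sycl::nd_range<1>(sycl::range<1>(n_groups) * sycl::range<1>(n_items),
                      sycl::range<1>(n_items)),
    gen_event,  // start only after the random numbers are ready
    [=](sycl::nd_item<1> item_1) {
        post_processing_kernel(d_samples);
    }).wait();
```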

Device API:

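```cpp
#include <oneapi/mkl/rng/device.hpp>

// Device API: numbers are generated and consumed inside a single kernel,
// so no intermediate buffer and no extra kernel are needed.
sycl_queue.parallel_for(
    sycl::nd_range<1>(sycl::range<1>(n_groups) * sycl::range<1>(n_items),
                      sycl::range<1>(n_items)),
    [=](sycl::nd_item<1> item_1) {
        // Same seed in every work-item; a per-item offset selects a disjoint part of
        // the sequence (two uniforms reserved per item, assuming the default
        // Box-Muller-based Gaussian method consumes at most two per call).
        oneapi::mkl::rng::device::mrg32k3a<1> engine_device(
            1u, 2 * item_1.get_global_id(0));
        oneapi::mkl::rng::device::gaussian<double> distr(0.0, 1.0);
        double rng_val = oneapi::mkl::rng::device::generate(distr, engine_device);
        post_processing_kernel(rng_val);
    }).wait();
```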

Because every Host API call launches at least one SYCL kernel of its own, the Device API version needs fewer kernels: one in this example, instead of at least two. The RNG Device API is also available as part of the oneAPI Math Kernel Library (oneMKL) Interfaces, an open-source project on GitHub.

Let us consider the American and European Monte Carlo workloads separately.

American Monte Carlo Options Pricing Model

To run this benchmark on an Intel® GPU, we took the original code from the NVIDIA* Developer Code Samples GitHub repository and migrated the native CUDA* GPU code to SYCL using the SYCLomatic open-source project. This tool is included in the Intel® oneAPI Base Toolkit and is also available from GitHub under the Apache* 2.0 license. SYCLomatic automatically migrates about 95% of the original CUDA code to SYCL.

Step-by-step instructions for migrating the American Monte Carlo Options Pricing Model example source code to SYCL, as well as the SYCL-enabled example project itself, are available in the GitHub repository.

To complete the migration, we made some manual code changes and tuned the code to the desired level of performance for our target architecture.

After completing the SYCLomatic migration, we used an RNG Host API call to generate the random numbers. To reduce the number of SYCL kernels, we then moved random number generation into the next kernel (generate_paths_kernel) using RNG Device API calls. This reduces the number of kernels needed and removes the need for extra memory to store the random numbers, because each number is used as soon as it is generated.

Using the Device API, we obtained a speedup of up to 2.13x for random number generation plus generate_paths_kernel compared with the traditional Host API calls, and a ~15% performance improvement for the application as a whole.
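The two figures can be related with Amdahl's law as a rough consistency check. Assuming both were measured at the same problem size (they are reported as "up to" and "~", so this is only approximate), let $f$ be the fraction of the Host API version's runtime spent in random number generation plus generate_paths_kernel, and $s = 2.13$ the speedup of that portion:

$$
S_{\text{overall}} = \frac{1}{(1 - f) + f/s}
\quad\Longrightarrow\quad
f = \frac{1 - 1/S_{\text{overall}}}{1 - 1/s} = \frac{1 - 1/1.15}{1 - 1/2.13} \approx \frac{0.1304}{0.5305} \approx 0.25
$$

In other words, a ~15% overall gain is what one would expect if that portion accounted for roughly a quarter of the Host API runtime.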

Figure 1 shows how the American Monte Carlo benchmark results scale with the number of calculated paths. The "RNG & Next Kernel Speedup" curve shows the speedup of the version that calls the RNG Device API inside generate_paths_kernel over the Host API version, which calls the RNG Host API and generate_paths_kernel separately. The "Full Benchmark Workload Speedup" curve shows the overall benefit of using the RNG Device API instead of the RNG Host API for the whole benchmark.

Figure 1. American Monte Carlo Scalability Results.

European Monte Carlo Options Pricing Model

Let us also consider the performance of the European Monte Carlo Options sample available in the oneAPI Samples GitHub repository. This sample uses the RNG Device API, so to compare it with the Host API, we modeled a duration of one year by setting the option_years variable to 1. With this setting, we could easily replace the device calls with a Host API call running as a separate SYCL kernel. However, the Host API version needs extra memory to store the generated numbers.

With these changes, we found that GPU memory is insufficient to store the ~100,000,000,000 double-precision numbers required, so we reduced the number of paths to 16,000 to let the code run successfully.

Figure 2 shows that using the RNG Device API yields a 3.69x performance improvement for the whole European Monte Carlo benchmark compared to the RNG Host API.
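For scale, the buffer the Host API version would need is the number of values times 8 bytes per double-precision number:

$$
10^{11} \times 8\ \text{B} = 8 \times 10^{11}\ \text{B} = 800\ \text{GB} \approx 745\ \text{GiB}
$$

This is several times the 128 GB of HBM2e on an Intel® Data Center GPU Max 1550, whereas the Device API version never materializes these numbers at all.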

Use oneMKL RNG Device API Routines to Your Advantage

The Device API can deliver a significant performance boost over the Host API, but it requires more code and more knowledge of the specific domain implementation, for example, how to assign engine seeds and offsets so that work-items draw non-overlapping random numbers. It is also more flexible, because users control the parameters of the SYCL parallel kernels.

The results in this article also apply to non-financial applications that have random number generation on their performance-critical path.

Addressing such challenges is a regular process for the Intel® Compiler and Intel® oneMKL software development teams. To make Intel software better for our customers, we are constantly looking for more efficient algorithms and better optimization techniques.

To get started now, look at the Intel® oneAPI Math Kernel Library, the oneAPI Math Kernel Library (oneMKL) Interfaces project, and SYCLomatic.

Additional Resources

- Intel® oneAPI Math Kernel Library

- oneAPI Math Kernel Library (oneMKL) Interfaces

- SYCLomatic

- Intel® DPC++ Compatibility Tool

- Unified Acceleration (UXL) Foundation

Notices & Disclaimers

Performance varies by use, configuration, and other factors. Learn more on the Performance Index site.

Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See backup for configuration details. No product or component can be absolutely secure.

Your costs and results may vary.

Intel technologies may require enabled hardware, software or service activation.

© Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

*Other names and brands may be claimed as the property of others.